Why medical technology often doesn’t make it from drawing board to hospital – StartupSmart

If there’s something wrong with your brain, how do you spot that in an MRI?

Of course, if it’s something obvious, such as a major aneurysm or a tumour, anyone can see it. But what if it’s something more subtle, such as a neural pathway that is more deteriorated than normal?

This might be hard to spot by simply looking at an image. However, there is a range of medical image analysis software that can detect something like this.

You may take a diffusion-weighted MRI – a type of MRI that displays white matter extremely well (think of white matter as the neural roadways that connect areas of grey matter).

Then, after processing that MRI, you can use tractography to view the white matter road system as a 3D computer model. You can then measure deterioration across these white matter pathways by looking at a measurement called the fractional anisotropy.
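For readers curious what that measurement involves, here is a minimal sketch of the standard fractional anisotropy formula. It assumes the three eigenvalues of the diffusion tensor at a single point have already been estimated; the function name and the example values are illustrative and are not taken from any particular software package.

```python
import math

def fractional_anisotropy(l1, l2, l3):
    """Fractional anisotropy from three diffusion tensor eigenvalues.

    Returns a value between 0 (water diffuses equally in all directions)
    and 1 (water diffuses along a single direction only).
    """
    denom = l1 * l1 + l2 * l2 + l3 * l3
    if denom == 0:
        # No measurable diffusion: treat as isotropic to avoid dividing by zero.
        return 0.0
    num = (l1 - l2) ** 2 + (l2 - l3) ** 2 + (l3 - l1) ** 2
    return math.sqrt(0.5 * num / denom)

# Illustrative eigenvalues (made up for this example), one dominant direction:
print(round(fractional_anisotropy(1.7e-3, 0.3e-3, 0.3e-3), 2))
```

A value near 1 suggests tightly organised fibres, and a lower value along a pathway that is normally well organised is one possible sign of deterioration.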

After someone uses a software tool to bend the image of your brain to a standard shape, its fractional anisotropy can be compared to a database of hundreds of other diffusion-weighted MRIs to find any abnormalities.
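One simple way such a comparison could work is a z-score: how many standard deviations a patient's value sits from the average of a reference group. The sketch below assumes the images have already been aligned to a standard shape and that one fractional anisotropy value per person has been extracted for the same pathway. The function names, the threshold of 2 and the numbers are hypothetical, chosen only to illustrate the idea; real tools use more elaborate statistics.

```python
from statistics import mean, stdev

def fa_z_score(patient_fa, reference_fas):
    """Standard deviations between a patient's FA and the reference mean."""
    sigma = stdev(reference_fas)  # sample standard deviation; needs 2+ values
    if sigma == 0:
        raise ValueError("reference values are identical; z-score undefined")
    return (patient_fa - mean(reference_fas)) / sigma

def is_abnormal(patient_fa, reference_fas, threshold=2.0):
    """Flag a value more than `threshold` standard deviations from the mean.

    The default threshold is an assumption for this sketch, not a clinical rule.
    """
    return abs(fa_z_score(patient_fa, reference_fas)) > threshold

# Made-up reference values for one pathway, for illustration only.
reference = [0.50, 0.52, 0.48, 0.51, 0.49]
print(is_abnormal(0.40, reference))
print(is_abnormal(0.50, reference))
```

With hundreds of reference scans rather than five, the same arithmetic gives a far more reliable picture of what counts as normal.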

But that probably won’t happen in a hospital. All of the methods described above exist in the research world – but in the clinical world, a radiologist will likely just eyeball your MRI and make a diagnosis based on that.

Why? One major reason is that this software is only really usable by experts in the research world, not clinicians.

The incentives to create medical imaging software are considerable, but the incentives to improve it to a final product – in the way that, say, Microsoft is organically inclined to improve its products after user feedback – are nonexistent.

If your operating system crashes, Microsoft has the resources and infrastructure to debug it and ship the fix in the next release. But science labs rarely have the inclination, the funding or the software engineering skills to improve their software after an initial release.

Beta or worse

FSL, a software toolbox created at the University of Oxford for analysing MRIs, is one of the best of its kind. Virtually every medical imaging researcher uses it. Yet its graphical user interface resembles something from the 1990s and is plagued with hard-to-follow acronyms, so it would be impossible or dangerous for the average clinician to use without six months of training.

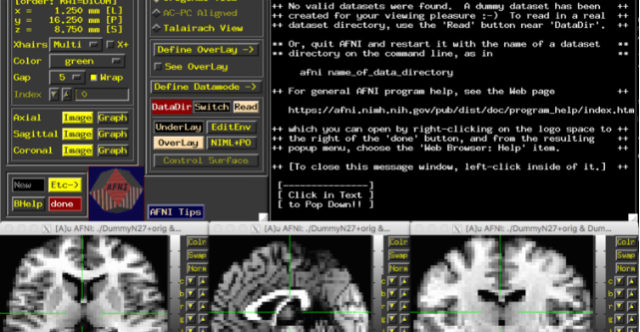

Most researchers don’t even bother with its graphical user interface, even when they’re just starting to learn it. Instead they use it as a command-line tool (that is to say, entirely text-based, as virtually all computers were before the early 1980s). This is not an anomaly. AFNI, another image analysis package, is worse: on startup, five windows pop up immediately and start flashing like an old GeoCities site.

In some cases, problems with research software can go beyond the user’s learning curve – and those problems are less obvious and more dangerous when not caught.

Both AFNI and FSL suffered from a bug that risked invalidating 40,000 fMRI studies from the past 15 years. Such bugs, unaccounted for, could further inhibit the potential use of research software in clinics.

No business model

Why do people still use this software despite all this?

Well, in the vast majority of cases, it does work. But the incentives to improve it enough to make it easier to use – or simply to make a better product – are not there.

Apple makes a profit from its computers and so is inclined to constantly improve them and make them as easy to use as possible. If it doesn’t, people will just switch to Windows, a product that essentially does the same thing.

But while you or I will pay money for a computer, FSL is open source and scientists pay nothing for it. The monetary incentive, rather, comes from the publications resulting from using this software – which can lead to grant funding for further research.

Neurovault, an open library for MRIs used in previous research studies, requests in its FAQs that researchers cite the original paper about Neurovault if they make any new discoveries with it, so that Neurovault can obtain more grant money to continue its work.

Databases such as Neurovault are excellent and very necessary initiatives – but do you notice Google asking people to cite its original PageRank algorithm paper if they make a discovery using Google?

Even Kitware, a medical imaging software company, is totally open-source and makes its money from grants and donations, rather than by selling its software and actively seeking feedback from users. Kitware has better graphical user interfaces, but it’s still essentially a research company; most of its tools would still not be suitable to use without several months of specialised training.

Medical imaging is a brilliant field filled with brilliant minds, but the incentives to turn its proofs of concept into final products, suitable for use by clinicians instead of just researchers, are not in place yet. While this remains the case, progress from the lab to the hospital will remain stalled.

Matthew Leming is a PhD candidate in Psychiatry at the University of Cambridge.

This article was originally published on The Conversation.
